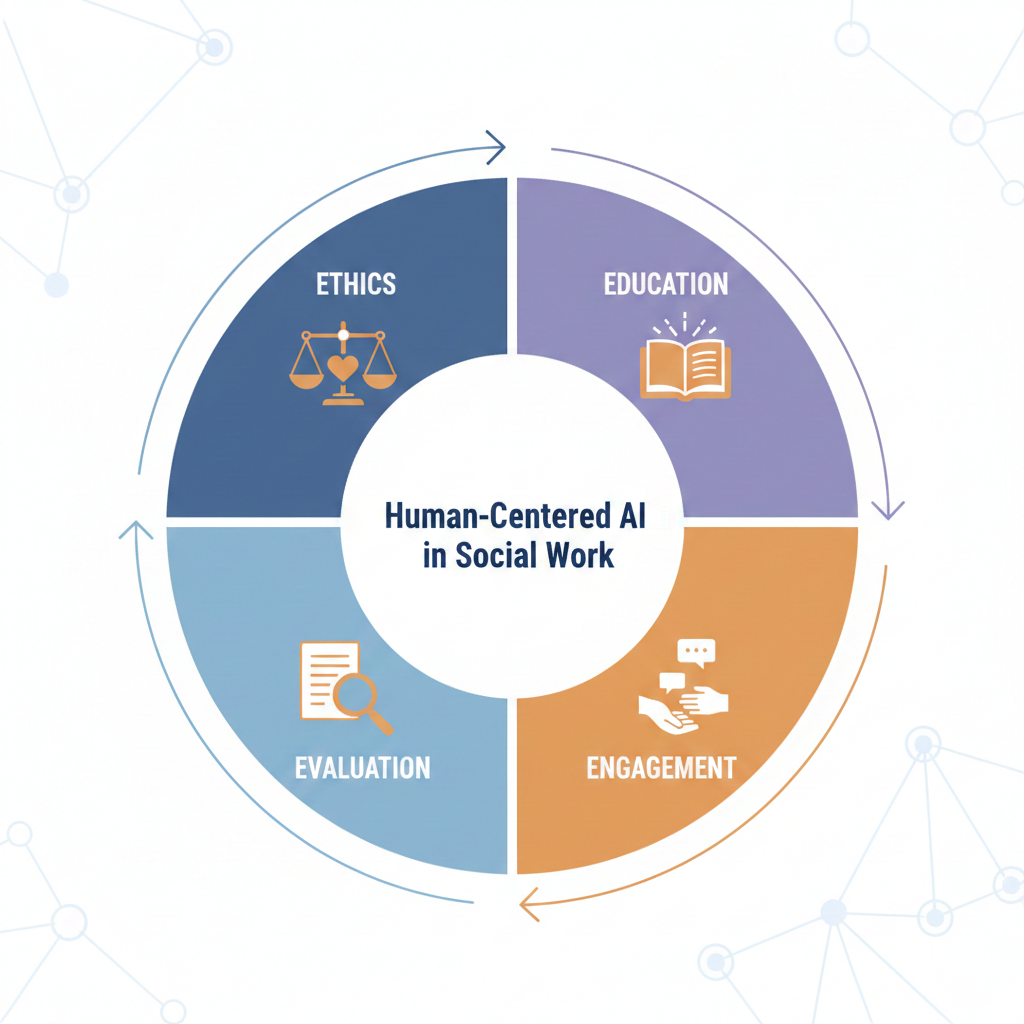

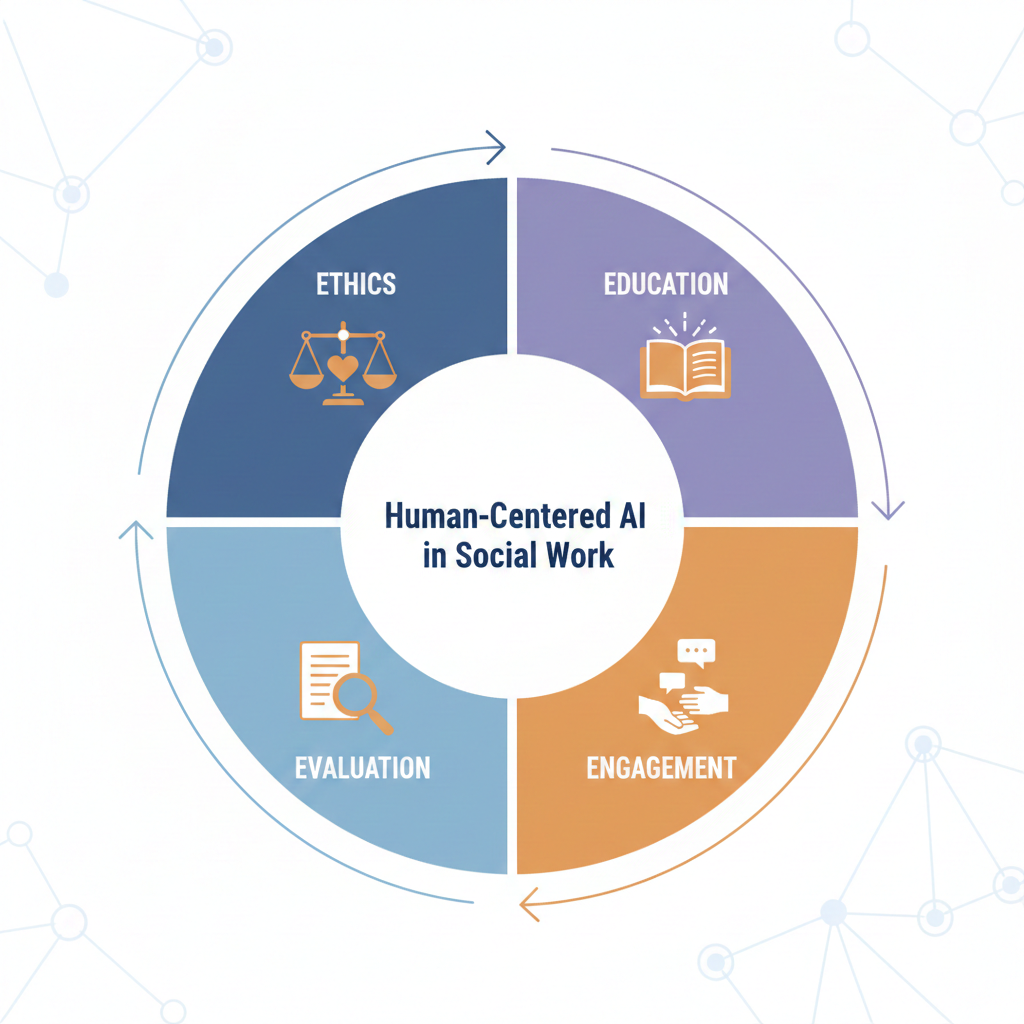

The 4 E’s of an AI Fluency Framework for Social Workers

A Human-Centered Framework for Ethical Practice

As artificial intelligence becomes increasingly embedded in social work education, healthcare systems, schools, nonprofit organizations, and private practice, conversations about AI fluency are no longer optional. Social workers are being encouraged—and in some cases required—to adopt new documentation systems, use predictive analytics tools, rely on automated scheduling platforms, or experiment with generative AI for drafting reports and treatment plans.

At the same time, there is often little guidance tailored specifically to social work about how to integrate these tools responsibly.

In technology and business settings, fluency often means speed, efficiency, productivity, and competitive advantage. In social work, fluency must mean something different. It must prioritize ethics, professional judgment, client dignity, equity, and accountability. It must strengthen—not weaken—the relational foundation of practice.

The 4 Es of AI Fluency for Social Workers offer a practical, values-aligned framework for navigating this moment with clarity and confidence. Rather than centering technical mastery, this model centers ethical reasoning, critical thinking, and intentional use across micro, mezzo, and macro practice contexts.

Why Social Work Needs a Values-Based AI Fluency Framework

Most AI literacy frameworks emphasize learning tools: how to write prompts, which platforms are most powerful, how to automate workflows, or how to increase efficiency. While these skills may have practical value, they are not sufficient for a profession grounded in ethical responsibility and social justice.

Social workers make decisions that affect access to services, custody arrangements, clinical diagnoses, safety planning, educational supports, benefits eligibility, and more. When AI tools influence documentation, assessments, referrals, or organizational decisions, they introduce new layers of power, opacity, and risk.

Without a profession-specific framework, social workers risk:

Over-relying on automated outputs

Assuming neutrality where bias exists

Allowing efficiency pressures to override ethical reflection

Integrating tools without informed consent processes

Deferring to systems without understanding their limitations

A values-based AI fluency framework ensures that technological competence never outpaces ethical accountability. The 4 Es model begins with ethics and builds outward, distinguishing knowledge from judgment and intentional use from default adoption.

Ethics: The Foundation of AI Fluency in Social Work

Ethics is not one component of AI fluency—it is the foundation.

In social work, ethical decision-making must precede tool adoption. The question is not simply “Can I use this AI system?” but “Should I use it in this context?” and “How does its use align with my obligations to clients, communities, and the profession?”

Ethical AI fluency includes:

Protecting client confidentiality and understanding data storage risks

Securing informed consent when AI tools are used in care or documentation

Maintaining transparency about how information is generated

Avoiding over-automation in sensitive assessments

Considering power dynamics when AI systems influence decision-making

For example, if a social worker uses generative AI to draft a progress note, ethical fluency requires reviewing the content carefully, ensuring it accurately reflects the client’s voice, and verifying that no confidential information was entered into an unsecured platform. If an agency implements predictive analytics to flag “high-risk” families, ethical fluency requires interrogating how those risk scores are generated and whether they disproportionately impact marginalized communities.

Ethics in AI use is not a checklist completed once during implementation. It is continuous ethical reasoning. Tools evolve. Data shifts. Organizational pressures change. Social workers remain accountable for outcomes, even when technology is involved.

Education: Understanding AI

The second E, Education, emphasizes foundational understanding rather than technical specialization.

Social workers do not need to become data scientists or software engineers. However, they do need enough knowledge to engage AI systems critically and responsibly. Without basic understanding, it becomes difficult to evaluate risks, challenge inappropriate applications, or advocate effectively for clients.

Educational fluency includes understanding that:

AI systems are trained on large datasets that reflect historical patterns

Outputs are probabilistic predictions, not objective truths

Bias in training data can result in biased outputs

Generative AI can fabricate information confidently

AI tools do not “understand” context or lived experience

When social workers understand these core realities, they are less likely to anthropomorphize systems or assume objectivity. They are better positioned to explain AI use to clients transparently and to push back against unrealistic claims made by vendors or administrators.

Education also involves recognizing the broader systems context: Who designed the tool? What data was used? Who benefits from its adoption? Who may be harmed? What regulatory protections are in place—or absent?

Educational fluency fosters discernment. It allows social workers to engage technology without being seduced by efficiency narratives or intimidated by technical complexity.

Evaluation: Critical Review

Evaluation is where AI fluency becomes visible in everyday practice.

AI-generated summaries, recommendations, diagnostic suggestions, or documentation drafts should never be treated as final decisions. They are starting points that require human interpretation. Evaluation is not an optional extra step; it is a professional responsibility.

Evaluative fluency includes:

Checking AI outputs for factual accuracy

Identifying potential bias or stereotyped language

Ensuring contextual relevance

Adjusting tone and framing to preserve client dignity

Removing fabricated citations or incorrect information

For instance, if AI generates a treatment plan goal, the social worker must ask: Does this align with the client’s expressed priorities? Does it reflect strengths? Does it incorporate cultural context? If an AI system flags a client as “high risk,” evaluative fluency requires questioning how that determination was made and whether it aligns with clinical judgment.

Importantly, errors in AI systems are not evenly distributed. Marginalized communities often bear disproportionate risk due to biased data patterns. Evaluation must therefore include a justice lens, asking how outputs might differentially affect clients based on race, disability, immigration status, language, gender identity, or socioeconomic position.

Evaluation is the bridge between technical output and ethical action. Without it, automation becomes abdication.

Engagement: Intentional Use and Boundary Setting

Engagement addresses how AI is actually used in practice. Fluency does not mean universal adoption. It means intentional integration with clear boundaries.

In many cases, AI can reduce administrative burden, support drafting, summarize policies, or assist with brainstorming. Used thoughtfully, it may decrease cognitive overload and allow social workers to focus more energy on relational work.

However, engagement requires clarity about where AI should not be used. Areas that require nuanced clinical judgment, trauma-informed assessment, crisis intervention, or culturally sensitive dialogue may not be appropriate for automation.

Intentional engagement includes:

Defining clear use cases

Establishing boundaries around client data input

Creating supervision conversations about AI use

Developing agency policies grounded in ethical standards

Knowing when to opt out

Engagement also requires maintaining the centrality of human connection. AI can assist with documentation. It cannot replicate empathy. It can draft summaries. It cannot build trust.

In social work, relational presence remains foundational. AI must remain a support tool—not a relational substitute.

Why the 4 Es Framework Works Across Social Work Roles

One of the strengths of the 4 Es framework is its adaptability across professional levels and practice settings.

Students can use the framework to build ethical habits early in their training. Rather than learning AI as a shortcut, they learn it as a tool that requires reflection and accountability.

Practitioners can apply the framework to real-world decisions: Should I use AI for this documentation task? How do I evaluate this output? How do I explain this to my client?

Supervisors can incorporate the 4 Es into reflective supervision, helping supervisees explore ethical tensions, cognitive biases, and boundary-setting strategies related to AI use.

Organizational leaders can use the framework to guide policy development, vendor selection, and staff training. Instead of adopting tools based solely on cost or efficiency, they can assess alignment with professional standards and equity commitments.

Because the 4 Es focus on thinking rather than specific platforms, the framework remains relevant even as technologies evolve. It provides a stable ethical anchor in a rapidly changing landscape.

AI Fluency as a Professional Responsibility

At its core, AI fluency is about preserving professional integrity as AI reshapes the conditions of practice.

The social work profession has adapted before to telehealth, electronic health records, and digital communication. Each shift required renewed attention to ethics, confidentiality, and boundaries. AI is another inflection point, and in one key respect it is no different from those earlier shifts: human accountability remains central.

The 4 Es of Ethics, Education, Evaluation, and Engagement offer a structured way to integrate AI without compromising what makes social work distinct.

Ethics ensures alignment with our values.

Education builds informed discernment.

Evaluation safeguards against harm.

Engagement protects intentionality and relational integrity.

AI should strengthen social work thinking, not replace it. When fluency is grounded in ethics and critical reflection, technology can support our work without redefining our professional identity.

The future of AI in social work will not be determined by the tools alone. It will be shaped by how thoughtfully, ethically, and intentionally social workers choose to engage them.